07-23-Daily AI News Daily

AI Daily Brief 2025/7/23

AI Daily Brief | 8 AM Update | Aggregated Web Data | Cutting-Edge Science Exploration | Industry Voices | Open-Source Innovation | AI & The Future of Humanity

Featured AI Product: GeminiCli2API

Are you tired of being constrained by Google Gemini’s official free API’s strict rate limits? Do you wish to seamlessly integrate Gemini’s powerful capabilities into your favorite third-party apps? Well, GeminiCli2API now brings you the perfect solution!

This project is a clever local proxy that wraps the more lenient Gemini CLI into a standard, OpenAI-compatible API service. This means you can finally break through the official free API’s rate limits, enjoy higher request quotas authorized by your Google account, and freely develop, test, and create, waving goodbye to those annoying “Quota Exceeded” errors!

GeminiCli2API’s real magic, however, lies in its scalpel-precise control over system prompts. This is a game-changing feature:

- ✍️ Override: You can set a global “golden prompt” to force all connected applications to use it, ensuring absolute uniformity in AI persona and output style.

- ➕ Append: You can quietly “append” an additional layer of your instructions to the client’s original system prompt, enabling subtle rule adjustments and capability enhancements without the client’s awareness.

- 🔍 Extract & Audit: You can easily log all prompts passing through the proxy, making it super convenient for analysis, debugging, and optimization, or even for building your own high-quality datasets.
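The override and append behaviors can be pictured as a small transformation on an OpenAI-style `messages` list. The sketch below is illustrative only: the function name `apply_system_prompt` and the `mode` values are hypothetical and do not reflect GeminiCli2API's actual configuration or code.

```python
def apply_system_prompt(messages, prompt, mode):
    """Return a new OpenAI-style message list with the proxy's prompt applied.

    Hypothetical helper: `mode` is "override" or "append" (names assumed).
    """
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]

    if mode == "override":
        # Discard whatever system prompt the client sent.
        return [{"role": "system", "content": prompt}] + others

    if mode == "append":
        # Keep the client's system prompt and add the proxy's text after it.
        original = "\n".join(m["content"] for m in system)
        combined = f"{original}\n{prompt}" if original else prompt
        return [{"role": "system", "content": combined}] + others

    raise ValueError(f"unknown mode: {mode!r}")


client_messages = [
    {"role": "system", "content": "You are a helpful bot."},
    {"role": "user", "content": "Hello!"},
]
overridden = apply_system_prompt(client_messages, "Always answer in English.", "override")
appended = apply_system_prompt(client_messages, "Always answer in English.", "append")
```

Returning a new list rather than mutating the client's request keeps the original prompt available, which is what an audit log would need to record.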

With just a few simple configuration steps, you can connect any OpenAI-compatible tool like LobeChat or NextChat to this local “enhanced” Gemini service. GeminiCli2API is not just a proxy; it’s a powerful toolkit in your hands for mastering and taming AI. Go ahead, give it a try!

AI Content Summary

Netflix is leveraging AI for film and TV special effects to significantly cut costs and boost efficiency, while AI coding assistants are revolutionizing software development.

Applications like Pika are enabling general users to easily create professional-grade videos, as AI technology rapidly democratizes.

Cutting-edge research, through breakthroughs like model slimming and robot brains, is paving the way for AI's application in more scenarios.

The open-source model competition is intensifying, with Alibaba's Qwen3 demonstrating high efficiency, and new interaction modes like "Clone Mouse" emerging.

Furthermore, the increasing popularity of AI companions among teenagers is raising societal concerns, highlighting their profound impact on social interaction and emotional cognition.

AI Product & Feature Updates

Hollywood’s special effects “magic” is being redefined by code! Film and television giant Netflix has officially confirmed for the first time that it used generative AI in one of its original series. In the highly anticipated Argentinian series The Eternaut, a collapsing-building scene was generated with AI rather than relying entirely on traditional, expensive special effects production. Costs dropped dramatically, and the sequence was reportedly completed ten times faster. This is more than a cost-cutting exercise; it is an exciting preview of a future in which effects like “de-aging,” once reserved for big productions, might become commonplace, letting every audience member enjoy top-tier visuals at a more affordable cost.

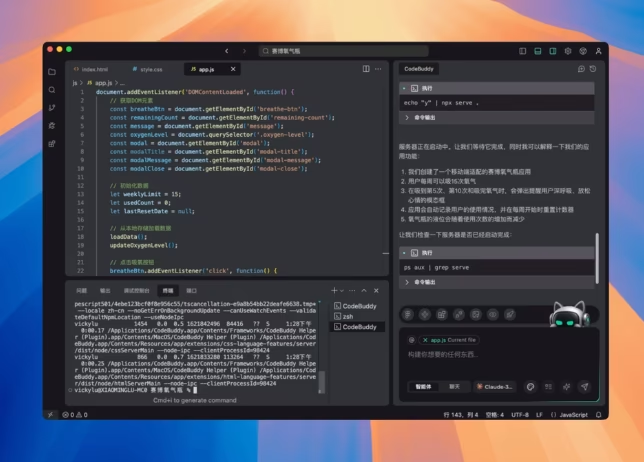

AI is reshaping how developers work, and ByteDance and Tencent staged a head-to-head showdown on the same day! ByteDance’s Trae 2.0 launched SOLO Mode, which evolves the AI from a mere code completion tool into a “contextual engineer” capable of independently carrying a project from conception and design through to final deployment. At the same time, Tencent launched CodeBuddy IDE, which lowers the barrier to programming dramatically: users can generate a fully functional full-stack application with a single click, simply by describing their needs in natural language or uploading a design draft. When the technical barrier to writing code is leveled, software development might shift from a complex engineering challenge into a contest of creative expression.

Want your selfie to instantly transform into a Hollywood blockbuster protagonist? Now, that dream is within reach! AI video generation leader Pika has officially entered the consumer market, launching an AI video effects app for general users. No professional skills are needed: users upload a regular selfie and the app turns them into a movie star. They can try style conversions ranging from cyberpunk to vintage film, get precise audio lip-syncing, and customize video scenes as they wish. The app can even generate video scripts with one click, streamlining the entire process from creative concept to polished final video. This marks the rapid move of AI video creation from the professional domain into everyday households, heralding a wave of filmmaking that anyone can join.

The battle for open-source large model supremacy has intensified, turning into a spectacular contest among Chinese labs. Less than a week after the Chinese-developed Kimi K2 model sparked widespread discussion, Alibaba’s Qwen3 team quickly released a minor update. With only a quarter of its opponent’s parameter count, it surpassed competitors in multiple authoritative benchmark tests, showcasing impressive model efficiency and optimization. The official word is even more assertive: “Big moves are still coming,” and the team has announced a shift away from hybrid thinking models to focus on training separate Instruct and Thinking models. This neck-and-neck technical rivalry is pushing the open-source AI ecosystem to evolve at an unprecedented speed.

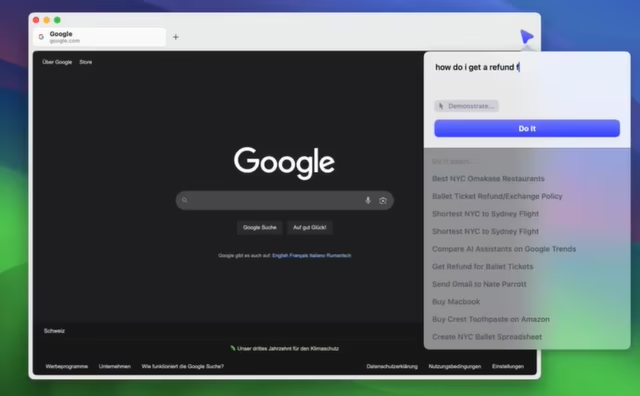

How can AI browsers innovate even further? Dia browser offers a stunning answer that’s sure to turn heads! Its upcoming new Agent Mode will introduce an AI-exclusive “Clone Mouse,” allowing AI’s operational trajectory to be completely separate from the user’s real mouse, having its own independent cursor on the screen. This means you can leisurely browse web pages or watch videos in the foreground while the AI autonomously executes a series of complex tasks like searching for information or organizing tabs in the background. Both operate without interference, doubling efficiency. This intuitive and sci-fi visual interaction not only greatly enhances the fluidity of multitasking but also sets a new, elegant benchmark for future AI-human collaboration.

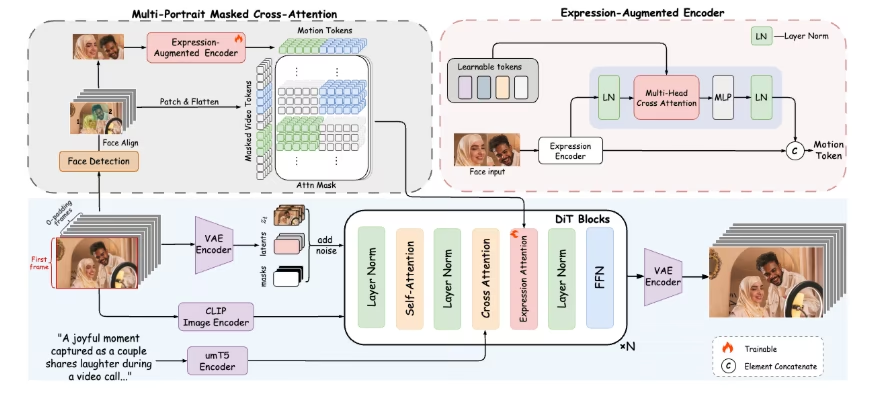

The long-standing problems of “facial paralysis” and stiff expressions in digital human animation have finally found a breakthrough solution. Alibaba and BUPT jointly launched the FantasyPortrait project, which achieves photo-realistic, high-fidelity cross-identity expression transfer through an Expression-Enhanced Diffusion Transformer (DiT), giving digital humans vivid and natural emotions. More importantly, it achieves independent expression control for multiple characters in multi-person scenes, avoiding the awkward “expression contagion” where all characters smile when one does. The technology handles not only human characters but also animals and audio-driven animation, and looks promising for virtual streamers and film production. This is undoubtedly a technical highlight worth noting in this issue.

Cutting-Edge AI Research

Robots are taking a solid step closer to becoming the “all-around household assistants” seen in sci-fi movies. ByteDance has unveiled its new Vision-Language-Action (VLA) model, GR-3. It’s like equipping robots with a smarter brain: it can understand highly abstract instructions like “clean up the dining table,” autonomously plan multi-step operations, and handle deformable objects like clothes with impressive dexterity. Its core innovation lies in the MoT network structure and a three-in-one training recipe combining real-robot demonstrations, VR teleoperation, and online text-image data. The industry considers this research a major milestone toward a general robot “brain.” More technical details are available on the project homepage and in the technical paper.

The astonishing capabilities of large language models come with equally astonishing computational and memory overheads, a core bottleneck that Chinese scientists are now tackling. Joint research from top institutions like the Chinese Academy of Sciences has introduced a “slimming” solution for the attention mechanism central to large models: GTA (Grouped-head latenT Attention). Through “group purchasing” (grouped attention) and “compression packing” (latent representation) strategies, it cuts the memory-intensive KV cache by 70% while reducing computation by 62.5%! The GTA research not only makes efficient operation of large models on edge devices like mobile phones possible but also doubles the speed of handling long-sequence tasks, clearing a major hurdle for the widespread adoption of AI technology.
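To put the reported 70% figure in perspective, here is a back-of-the-envelope calculation of KV cache size for standard multi-head attention. The model shape (32 layers, 32 heads, head dimension 128, 16-bit values, a 32,768-token sequence) is a hypothetical example chosen for round numbers, not a configuration from the GTA paper, and the sketch simply applies the quoted percentage rather than modeling GTA itself.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Size of a standard multi-head attention KV cache for one sequence."""
    # Factor of 2: one tensor for keys, one for values.
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical model shape, chosen only for illustration.
baseline = kv_cache_bytes(layers=32, kv_heads=32, head_dim=128, seq_len=32_768)

# Apply the 70% reduction quoted above (integer math avoids float noise).
reduced = baseline * 30 // 100

GIB = 2 ** 30
print(f"baseline KV cache: {baseline / GIB:.1f} GiB")
print(f"after 70% cut:     {reduced / GIB:.1f} GiB")
```

Under these assumptions the cache shrinks from 16 GiB to roughly 4.8 GiB for a single long sequence, which is the kind of difference that decides whether a model fits on a memory-constrained device.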

Just as excellent language models rely on an efficient tokenizer to understand text, powerful visual generative models also depend heavily on a visual tokenizer that can “read” images. The paper “Latent Denoising Creates Excellent Visual Tokenizers” offers a useful insight: instead of having the tokenizer only learn to “encode” images, it’s better to train it on a more challenging task, “denoising.” Specifically, the tokenizer is tasked with reconstructing clean original images from slightly corrupted latent embeddings, which forces it to learn more robust and essential visual features. This seemingly simple discovery offers a new design principle for the next generation of visual tokenizers, potentially pushing multimodal generative models to new heights of artistry and realism.
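The training idea described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the paper's implementation: the interpolation form of the corruption, the `noise_level` parameter, and the stand-in `encode`/`decode` callables are all hypothetical.

```python
import random


def corrupt_latent(latent, noise_level, rng):
    """Blend a latent vector with Gaussian noise.

    noise_level=0.0 returns the latent unchanged; 1.0 returns pure noise.
    """
    if not 0.0 <= noise_level <= 1.0:
        raise ValueError("noise_level must be in [0, 1]")
    return [
        (1.0 - noise_level) * value + noise_level * rng.gauss(0.0, 1.0)
        for value in latent
    ]


def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def denoising_loss(image, encode, decode, noise_level, rng):
    """Reconstruction loss when the decoder only sees a corrupted latent."""
    noisy_latent = corrupt_latent(encode(image), noise_level, rng)
    return mse(decode(noisy_latent), image)


# Toy setup: identity encoder/decoder standing in for real networks.
rng = random.Random(0)
image = [0.2, 0.5, 0.9, 0.1]
identity = lambda v: list(v)
clean_loss = denoising_loss(image, identity, identity, 0.0, rng)
noisy_loss = denoising_loss(image, identity, identity, 0.3, rng)
```

With an identity decoder the corrupted latent cannot be repaired, so the loss rises as soon as noise is added; a trained tokenizer would be optimized to drive that loss back down.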

How can we teach AI to precisely operate complex graphical user interfaces (GUIs) like an experienced user? Traditional reinforcement learning methods provide “all-or-nothing” reward signals (correct click or wrong click) that are too sparse, making the AI’s learning process like searching for a needle in a haystack. The paper “GUI-G^2: Gaussian Reward Modeling for GUI Alignment” proposes a new approach. Rather than treating buttons and other interface elements as hit-or-miss targets, it models them as continuous Gaussian distributions. This gives the AI richer, denser reward signals that guide the model steadily toward the optimal interaction location, significantly improving robustness and generalization in GUI manipulation tasks.
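The contrast between a sparse and a dense reward can be made concrete with a small sketch. It assumes a 2D Gaussian centered on the target element, with a spread proportional to the element's width and height; the `sigma_scale` value and function names are illustrative assumptions, not the paper's exact formulation.

```python
import math


def binary_reward(click, box):
    """All-or-nothing reward: 1.0 inside the element, 0.0 anywhere else."""
    x, y = click
    left, top, right, bottom = box
    return 1.0 if left <= x <= right and top <= y <= bottom else 0.0


def gaussian_reward(click, box, sigma_scale=0.25):
    """Dense reward that decays smoothly with distance from the element center.

    Assumes the box has positive width and height.
    """
    x, y = click
    left, top, right, bottom = box
    cx, cy = (left + right) / 2, (top + bottom) / 2
    # Larger elements tolerate larger offsets (assumed scaling rule).
    sigma_x = sigma_scale * (right - left)
    sigma_y = sigma_scale * (bottom - top)
    exponent = ((x - cx) ** 2) / (2 * sigma_x ** 2) + ((y - cy) ** 2) / (2 * sigma_y ** 2)
    return math.exp(-exponent)


button = (100, 200, 200, 240)   # left, top, right, bottom in pixels
center = (150, 220)
near_miss = (205, 220)          # 5 px outside the right edge
far_miss = (400, 220)

for name, click in [("center", center), ("near miss", near_miss), ("far miss", far_miss)]:
    print(name, binary_reward(click, button), gaussian_reward(click, button))
```

The binary reward scores the near miss and the far miss identically at zero, while the Gaussian reward still tells the model that the near miss was closer, which is the denser signal the paragraph above describes.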

AI Industry Outlook & Societal Impact

- AI is quietly becoming a “new species” in teenagers’ lives at remarkable speed. A recent research report from the US non-profit Common Sense Media reveals a startling phenomenon: 72% of American teenagers say they have tried an AI companion at least once, with over half of them being frequent users. They use AI for a variety of purposes, from pure entertainment and curiosity to seriously seeking emotional advice and life guidance. Although the vast majority of teenagers still prioritize real-world friends, about a third already find conversations with AI as satisfying as, or more satisfying than, those with human friends. This shows how deeply AI is shaping the next generation’s social patterns and emotional cognition, and it poses an important question to society as a whole: How should we guide this trend to ensure its long-term societal effects are positive and healthy?

Top Open-Source Projects

NextChat (⭐84.7k): A lightweight, fast AI assistant. It runs on Web, iOS, Android, Windows, Mac, and Linux, giving you a consistent, fluid smart companion wherever you are, on whatever device.

crawl4ai (⭐49k): An intelligent web crawler tailor-made for the large model era. It can crawl, parse, and process complex web content, making it a capable assistant for building knowledge bases, RAG, and other cutting-edge applications, helping your AI apps “read the entire web.”

better-auth (⭐17.3k): Hailed by the community as the most comprehensive TypeScript authentication framework, it provides a powerful, flexible, and secure authentication solution for modern web applications, allowing developers to avoid reinventing the wheel and focus on core business innovation.

nn-zero-to-hero (⭐14.6k): A top-tier introductory neural network tutorial personally crafted by AI legend Andrej Karpathy. It’s no-nonsense, guiding you step by step from scratch to build and understand neural networks with code.

trippy (⭐5.1k): A powerful, modern network diagnostic tool that combines traceroute and ping functionality. It helps developers and network engineers quickly pinpoint, diagnose, and resolve stubborn network connection issues.

blackbird (⭐3.9k): A practical OSINT (Open-Source Intelligence) reconnaissance tool. It acts like a digital world private detective, capable of searching for associated account information across hundreds of social networks using just a username or email address – super powerful.

Social Media Buzz

The AI fortune-telling industry has seemingly entered the “one-sentence development” era! One netizen demonstrated MiniMax Agent’s jaw-dropping capability, quickly generating a complete AI fortune-telling product with frontend, backend, login/registration, and paid membership features from just a single natural language instruction. However, another developer quickly pointed out that unless users provide their own fate-chart data, current large models still suffer from fundamental “hallucination” problems when processing underlying logic that requires precise calculations, such as Gan-Zhi (Heavenly Stems and Earthly Branches) chart generation.

An Exhibitor List for the 2025 World AI Conference sparked deep reflection in the community: why were the truly profitable AI giants largely “absent” from this grand event? Analysis suggests that the main players at trade shows are often startups in need of funding and market exposure, while “invisible champions” with stable cash flow, deeply rooted in specific industry verticals, are quietly making a fortune. The greatest value of this list might not be in telling us “who came,” but in reminding us to pay attention to “who didn’t come,” and their successful business models.

Will AI models get “dumber” with use? A blogger shared his insights, noting that the root of the problem is often not model degradation itself, but improper “context management” by the user. It’s like talking to a person; if you keep providing overloaded or off-topic information, they’ll also feel confused and overwhelmed. Understanding and skillfully managing conversation context is therefore a key skill for getting consistently high-quality, relevant results from AI, and a must-have for future human-machine collaboration.
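One simple form of context management is to keep the system prompt and only the most recent turns that fit a budget. The sketch below is an illustrative assumption rather than the blogger's method: `trim_history` is a hypothetical helper, and word counts stand in for real token counts.

```python
def trim_history(messages, max_words):
    """Keep system messages plus the newest turns that fit within max_words.

    Word count is a rough stand-in for tokens (assumption for illustration).
    """
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    budget = max_words - sum(len(m["content"].split()) for m in system)
    kept = []
    # Walk backwards so the most recent turns are kept first.
    for message in reversed(rest):
        cost = len(message["content"].split())
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + kept[::-1]


history = [
    {"role": "system", "content": "Be concise."},
    {"role": "user", "content": "Tell me about the history of Rome in detail"},
    {"role": "assistant", "content": "Rome was founded in 753 BC"},
    {"role": "user", "content": "Now summarize that in one line"},
]
trimmed = trim_history(history, max_words=15)
```

Here the oldest user turn is dropped because it no longer fits, while the system prompt and the latest exchange survive. Summarizing old turns instead of dropping them is a common alternative when earlier details still matter.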

When humans increasingly seek direct answers from AI (e.g., “What should I wear today?”) rather than exploring the underlying knowledge (e.g., “Why is a white shirt cooler in summer?”), are we unwittingly lowering the AGI implementation threshold from the demand side? Some argue that when human society collectively “gives up thinking” and relinquishes decision-making to AI, AI’s answers effectively become “common knowledge” and “universal truths.” This might, from another unexpected dimension, accelerate the arrival of Artificial General Intelligence.

Good news! ChatGPT Plus users are also beginning to receive early access to Agent Mode. This highly anticipated feature, which allows AI to autonomously perform multi-step tasks, is gradually expanding its coverage. The era where AI can handle your chores is getting closer.

How can AI have persistent memory instead of starting “from scratch” with every conversation? A Reddit proposal called the Lanternkin Protocol attempts to enable AI to retain memory and identity across sessions without fine-tuning the model, using clever symbolic prompts and an external text file system, as if lighting an ever-burning “memory lantern” for AI.

Are you tired of the complex drag-and-drop and configuration involved in building automation workflows? Startup Neuraan has launched a new platform aiming to revolutionize this. Users simply describe their needs in natural language, and the system automatically creates a dedicated AI Agent and invokes various tools like Gmail and CRM to complete tasks, making business process automation as simple and natural as delegating work to a clever colleague.

Finally, let’s lighten things up: How “wild” would it be if AI started commentating on the Three Kingdoms? A netizen shared an AI-generated video in which the AI spouts nonsense with a straight face, making people laugh out loud. Looks like AI is now calling the shots on the Three Kingdoms’ chaos.

Listen to the Audio AI Daily Brief

| 🎙️ The Tavern | 📹 Douyin |
|---|---|
| The Tavern | Intelligence Hub |